Just utter the phrase “HA/DR” in any IT forum and, without exception, you’ll get 10 different passionate views, usually spanning 15+ years of technology depending on when the attendees last touched a console. Of all the workshops I run, this is always the one that calls on educational, technical, and UN Peacekeeper skills in equal measure.

Below are a few facts:

- It remains one of the most misunderstood topics in a cloud environment which, when coupled with business requirements evolved from physical “hosting” DNA, results in huge overspending.

- Conversely, it’s also an area where we commonly see massive shortcuts taken to create low price points. The cost may be lower, but it puts you in the ironic position of having a technically higher-risk solution backed by an SOW “insurance policy”. Spinning the roulette wheel on your recovery metrics is a high-stakes game.

- There is a tendency to over-engineer business continuity designs. Keeping it simple and cloud-native usually means there’s less to go wrong.

Here are my top 5 points to consider when dealing with business continuity architecture:

1. Shortcuts come with a (recovery point) health warning

Over the years, we have seen customers struggle with large price variations in vendor responses. One of the culprits is often the approach to business recovery, as it is full of tempting commercial shortcuts that help offer a winning price point. Here are some recent real-life examples:

| # | Compromise | Impact |
|---|---|---|
| 1 | Stacking Dev, QA DB on the same EC2 instance | Unable to scale independently. Cross-version dependency. 2x maintenance downtime impacts. |
| 2 | Stacking App Servers/DB on the same Prod EC2 instance | Single point of failure on 1x EC2, unable to auto-scale or separate apps. |
| 3 | “Sacrificial” QA box for production failover | HA event removes the production release path. DB load time can be significant (RTO), failback is highly complex, and the resolution path is compromised. Can be quite a manual process. |
| 4 | No cross-regional resilience | While unlikely, relying on single-region storage does not protect from a full regional outage or, more likely, account compromise. |
| 5 | Under-sizing (the “AWS compute is more powerful” myth) | Untrue: SAP provides the SD two-tier benchmark, certified SAPS, and HANA sizing. Only do this if you can genuinely downsize. If you size below SAP EWA reported sizing, expect an impact! |
| 6 | Technical debt assumptions (delete ~10% of the DB to squeeze it into a cheaper HANA VM) | Make sure that is functionally validated. If the data volumes are bigger than the appliance, the DB will not be fully loaded into memory on HANA, resulting in large performance deterioration. |
| 7 | Snapshots only for recovery images | Lower-resolution recovery point. Images should always include logs and ideally use AWS-validated tools such as AWS Backup + Backint. |
| 8 | No OS clustering, EC2 restart is fine | EC2 restart is not SLA-assured, and SAP (especially HANA) takes time to load into memory, making restart unsuitable as the sole mechanism for production RTO and performance. |
| 9 | Storage striping, reduced volume sets | Common shortcut. Incorrect disk striping = large performance impacts across dialogue, batch, and peak processing. You need a performing storage strategy. Make sure it has passed SAP TDI testing: it’s free, easy, and provides SAP performance assurance. |
| 10 | Manual failover required with aggressive RxO | Simply put, the more aggressive the SLA and RxO, the less time for human hands. We believe 99.9% and above always requires clustering and auto-failover mechanisms. |

2. Don’t flood fill the SLA requirement column

“Production = 99.95%.” In almost all cases, organizations provide a single “Production” SLA as part of their requirements. While this has been the norm in the IT industry since the inception of SLAs, it’s also a very rigid way of architecting.

We always recommend a per-application, business-driven recovery design because:

- In an agile cloud environment, you can have different business recovery metrics per application.

- Flood filling SLAs across landscape tiers always results in higher pricing.

- It’s an “un-cloudy” way of architecting. The technology can provide granular design, so why block it?

- Many of the components of SAP Cloud architecture offer high uptime and are often serverless.

- Original constraints of needing high levels of “physical” redundancy do not apply to Cloud.

- It’s not all or nothing. Applications can invoke HA or even DR independently from each other.

For a recent large retail customer with 49 SIDs in the production landscape, adopting a granular architecture (which used 3 different patterns) removed $400k/year from the “flood fill” version of the same bill of materials.

3. Think Recovery point/time objective, not SLAs

Our industry is fixated on SLAs, sometimes unhealthily so. SLAs are usually interpreted as unplanned downtime per month, but the Recovery Point and Recovery Time metrics (how much data you can afford to lose and how long it takes to return to operations) are the important data points here, as they unlock the ability to adopt fine-grained recovery patterns.
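To make the contrast concrete, here is a minimal Python sketch that converts an SLA percentage into the downtime it permits. It assumes a 30-day month, and the function name `allowed_downtime_minutes` is purely illustrative (it is not part of any SAP or AWS tool).

```python
def allowed_downtime_minutes(sla_percent: float, days: int = 30) -> float:
    """Return the minutes of downtime an SLA permits over a period.

    Assumes the SLA is measured over `days` full 24-hour days.
    """
    total_minutes = days * 24 * 60
    return total_minutes * (1 - sla_percent / 100)


# Compare a few common SLA levels over an assumed 30-day month.
for sla in (99.0, 99.9, 99.95, 99.99):
    minutes = allowed_downtime_minutes(sla)
    print(f"{sla}% SLA allows about {minutes:.1f} minutes of downtime per month")
```

Note what the result leaves out: a downtime budget says nothing about how much data is lost in an incident (RPO) or how long a single recovery takes (RTO), which is why those two metrics drive the design.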

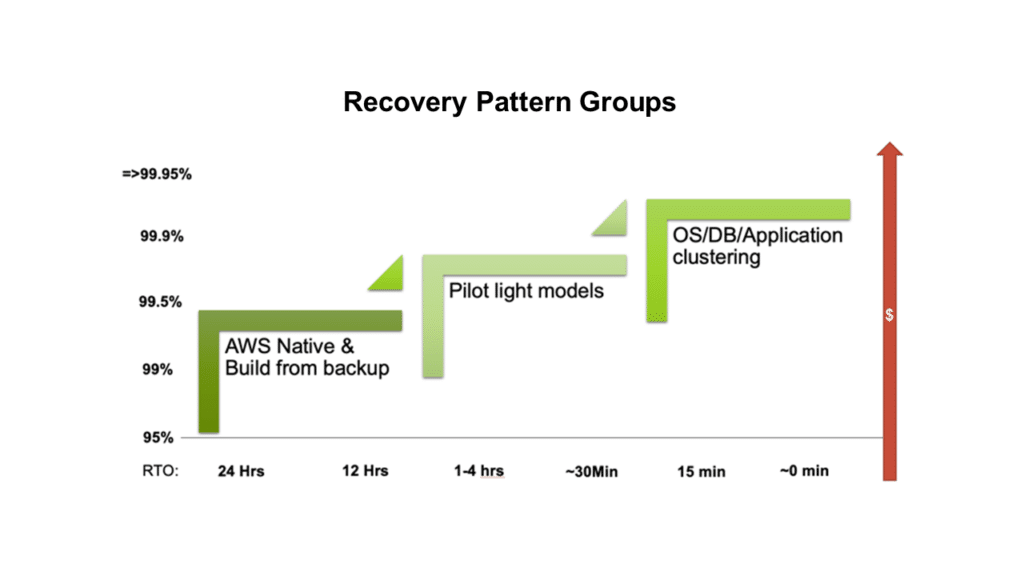

At a high level, there are 3 main categories:

- Application restart or building from system images.

- Pilot lights where secondary systems automatically start up on a schedule, synchronize then go back to “sleep” to save costs.

- Active clusters where no data can be lost.

There is a whole family of patterns within each of these areas, but it’s the RTO/RPO that really drives their use and the associated cost. Back to Point 2, selecting a combination of Pilot Lights and full clusters (usually for systems of record) is a proven way to optimize pricing.
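As a rough illustration of how RTO/RPO can drive the choice per application, the sketch below maps recovery targets onto the three categories above. Everything specific in it is an assumption made for the example: the `RecoveryTarget` type, the `choose_pattern` function, the 240-minute rebuild threshold, and the SIDs are hypothetical, and a real design would use thresholds validated against your own landscape.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RecoveryTarget:
    sid: str            # hypothetical system identifier
    rpo_minutes: float  # how much data loss the business can sustain
    rto_minutes: float  # how quickly operations must resume


def choose_pattern(target: RecoveryTarget, rebuild_minutes: float = 240.0) -> str:
    """Pick one of the three high-level categories for a system.

    `rebuild_minutes` is an assumed time to restart or rebuild from
    system images; it is a placeholder, not a measured value.
    """
    if target.rpo_minutes == 0:
        # No data can be lost, so only an active cluster qualifies.
        return "active cluster"
    if target.rto_minutes >= rebuild_minutes:
        # The business can wait long enough for a restart or rebuild.
        return "restart / rebuild from image"
    # Some data loss is tolerable, but recovery must beat a rebuild.
    return "pilot light"


# Hypothetical landscape: each application gets its own pattern.
landscape = [
    RecoveryTarget("ERP", rpo_minutes=0, rto_minutes=15),
    RecoveryTarget("BW", rpo_minutes=60, rto_minutes=120),
    RecoveryTarget("SOLMAN", rpo_minutes=1440, rto_minutes=480),
]
for system in landscape:
    print(f"{system.sid}: {choose_pattern(system)}")
```

The point of the sketch is the shape of the decision, not the numbers: each SID is assessed on its own targets instead of inheriting one landscape-wide SLA.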

4. Know the science

Be a science-first organization. It is very important to know that the science behind the solution being presented to you will meet your recovery targets. It’s the difference between knowing why a deal that seems too good to be true probably is, versus being woken up by a P1 at 3 a.m. (we’ve all been there), followed closely by a CIO summons to explain “What the hell…?”

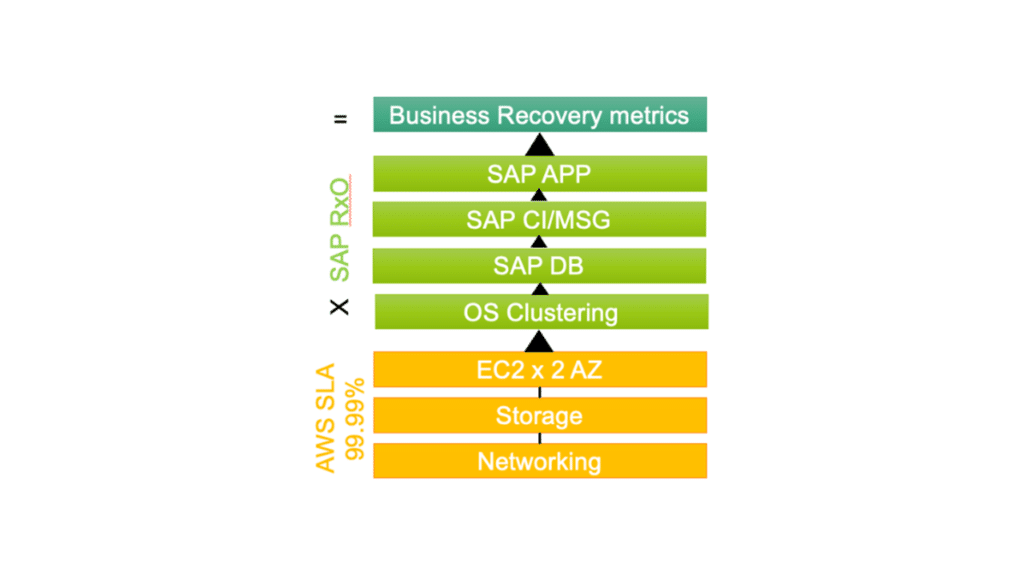

Simply put, if any one of the layers in the architecture is unbalanced, then the SLA is an “insurance policy”, not a well-architected design. I like to think of it as:

Overall uptime & recovery = AWS Region x EC2 SLA x Storage SLA(s) x OS up x DB Up x SAP CI/MSG + App.
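One way to read this formula is as a serial chain in which every layer must be up for the system to be up, so the per-layer availabilities multiply. The Python sketch below works under that assumption and, for simplicity, treats the application as one more layer in the chain. The layer names and figures are hypothetical placeholders, not published AWS or SAP SLA values.

```python
from math import prod


def composite_availability(layers: dict[str, float]) -> float:
    """Multiply per-layer availabilities, treating the stack as a serial chain."""
    return prod(layers.values())


def weakest_layer(layers: dict[str, float]) -> str:
    """Return the name of the layer with the lowest availability."""
    return min(layers, key=layers.get)


# Hypothetical per-layer availabilities for illustration only.
example_layers = {
    "aws_region": 0.9999,
    "ec2": 0.9995,
    "storage": 0.9999,
    "os": 0.9995,
    "database": 0.999,
    "sap_ci_msg": 0.999,
    "app": 0.9995,
}

overall = composite_availability(example_layers)
print(f"Overall availability: {overall:.4%}")
print(f"Weakest layer: {weakest_layer(example_layers)}")
```

Because every factor is below 1, the product is always lower than the weakest single layer, which is why one unbalanced layer caps the whole stack no matter how strong the others are.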

With this in mind, you can look at each layer in the architecture and determine if there is a failure point. This is a topic that is easier to describe through a picture:

5. Hang on. That’s HA not DR

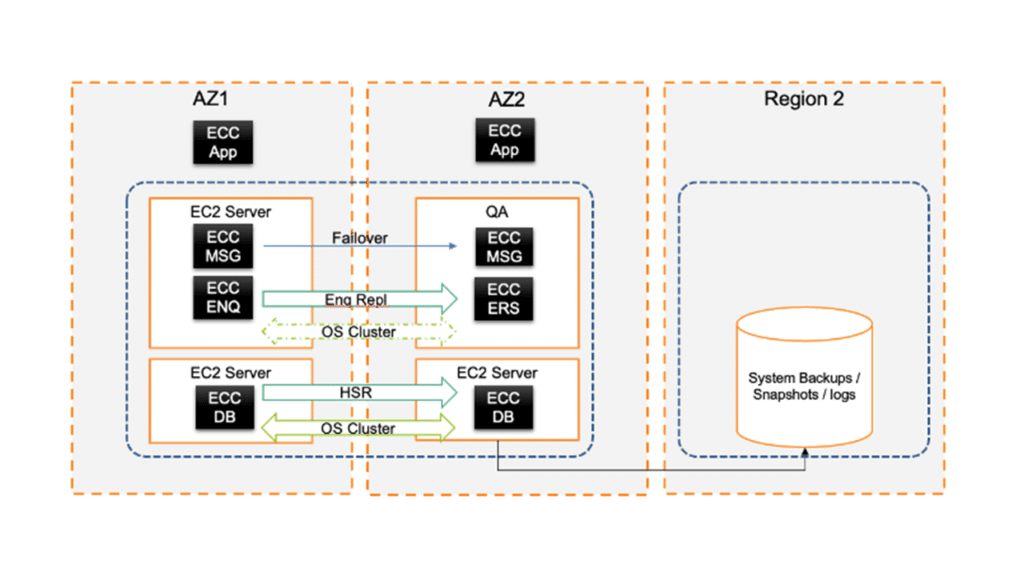

This topic is a big can of worms. If my HA system sits in a secondary AWS Availability Zone, replicates data synchronously, is geographically isolated, and can run production operations if anything goes wrong in the primary site, is it also DR?

The answer is: it all depends on your organization’s risk perspective. I’d recommend using AWS Well-Architected best practice, clearly articulated to your vendors, or you will end up with mixed architecture interpretations of risk, SLA, and recovery (and usually some of those earlier shortcuts).

At Lemongrass, our thinking is that a regionally resilient design is more than sufficient for most organizations. We couple this with replicating just the backup images, snapshots, etc., into a second region “just in case”, so we have an image from which to rebuild the landscape if a meteoric event strikes. It’s the “just right” sweet spot, or commercial Goldilocks zone, between too much (compute) and too little (+risk), plus it protects against root account compromise in a public cloud.

I’m sure you will have lots of views and ideas based on your history, experience, and industry, and will likely both agree and disagree on many points, which is exactly what makes this topic a great debate. I’m also 99.9% sure we’ll still be discussing this in years to come.
